Kubernetes BGP Connectivity with a UniFi router

In my previous article on building a Kubernetes cluster with Talos Linux, I used a Kubernetes Service of type NodePort to expose a workload to my homelab network. However, exposing workloads through NodePorts is neither efficient nor standard practice. In this article, I document how I configured Cilium’s Gateway API support as a basic reverse proxy, and Cilium’s BGP Control Plane to advertise a route for the reverse proxy’s IP address into the routing table of a UniFi router.

Prerequisites

- UniFi UDM SE (or another supported device) running UniFi OS v4.1+

- A 3-node Kubernetes cluster (version 1.32) in which every node is both control plane and worker

- Cilium CNI version 1.16.5 installed

- All Kubernetes nodes and pods in a Ready/Running state

- kubectl version 1.32.0 installed locally

UniFi

Create an FRR BGP configuration file for the BGP service on the UniFi router. Adjust router-id to match the IP address of your router, and adjust the neighbor lines to match the IP addresses of your Kubernetes nodes. dev-clus-core is an arbitrary peer-group name I used to describe the Kubernetes cluster.

tee bgp.conf << EOF > /dev/null
router bgp 65000
 bgp bestpath as-path multipath-relax
 no bgp ebgp-requires-policy
 bgp router-id 192.168.2.1
 neighbor dev-clus-core peer-group
 neighbor dev-clus-core remote-as external
 neighbor 192.168.110.31 peer-group dev-clus-core
 neighbor 192.168.110.32 peer-group dev-clus-core
 neighbor 192.168.110.33 peer-group dev-clus-core
EOF
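If the node list changes often, the neighbor lines can be generated instead of typed by hand. Here is a minimal sketch; gen_bgp_conf is a hypothetical helper name of my own (not part of FRR or UniFi), and it only prints the same statements used in the file above.

```shell
# Hypothetical helper: prints an FRR BGP config for a list of node IPs.
# Usage: gen_bgp_conf <router-asn> <router-id> <peer-group> <node-ip>...
gen_bgp_conf() {
  asn=$1
  router_id=$2
  group=$3
  shift 3
  printf 'router bgp %s\n' "$asn"
  printf ' bgp bestpath as-path multipath-relax\n'
  printf ' no bgp ebgp-requires-policy\n'
  printf ' bgp router-id %s\n' "$router_id"
  printf ' neighbor %s peer-group\n' "$group"
  printf ' neighbor %s remote-as external\n' "$group"
  # One neighbor statement per Kubernetes node.
  for node_ip in "$@"; do
    printf ' neighbor %s peer-group %s\n' "$node_ip" "$group"
  done
}

# Print the config for the three nodes used in this article.
gen_bgp_conf 65000 192.168.2.1 dev-clus-core \
  192.168.110.31 192.168.110.32 192.168.110.33
```

Redirecting the output to bgp.conf gives a file that can be uploaded in the same way as the handwritten one.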

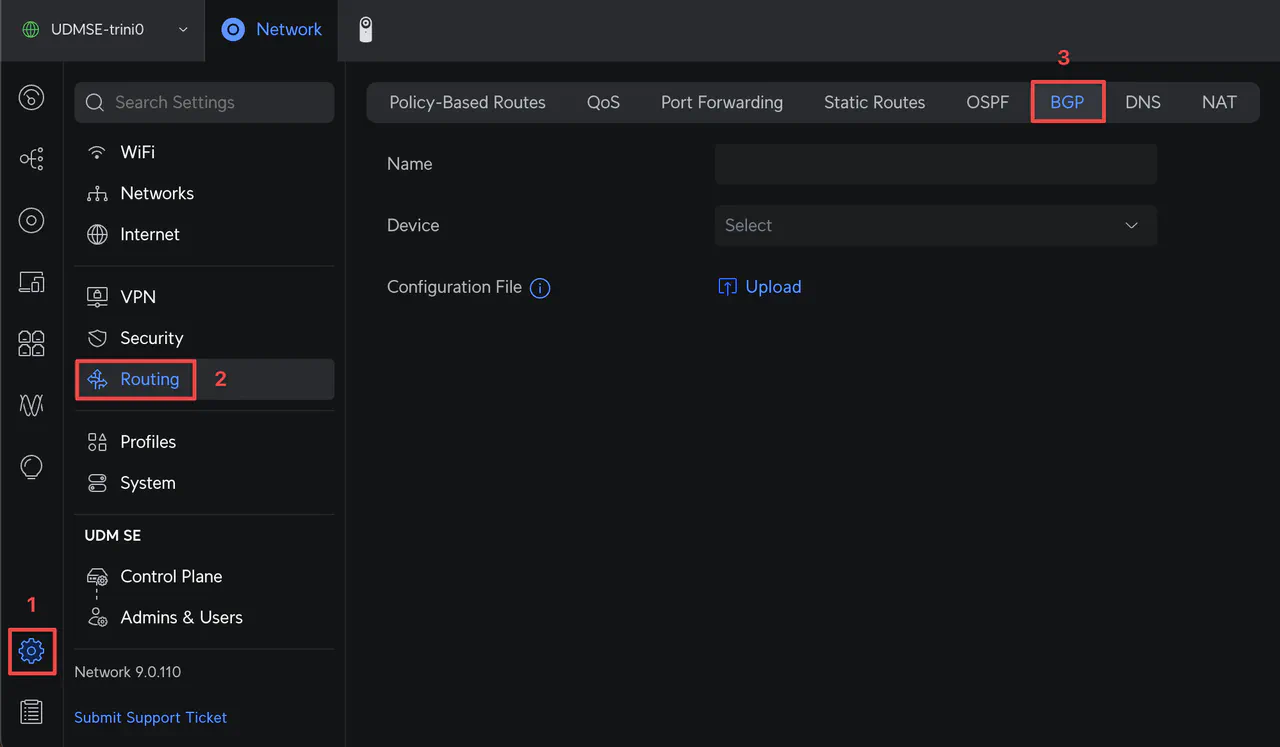

Log into your UniFi Cloud Gateway and go to Settings -> Routing -> BGP.

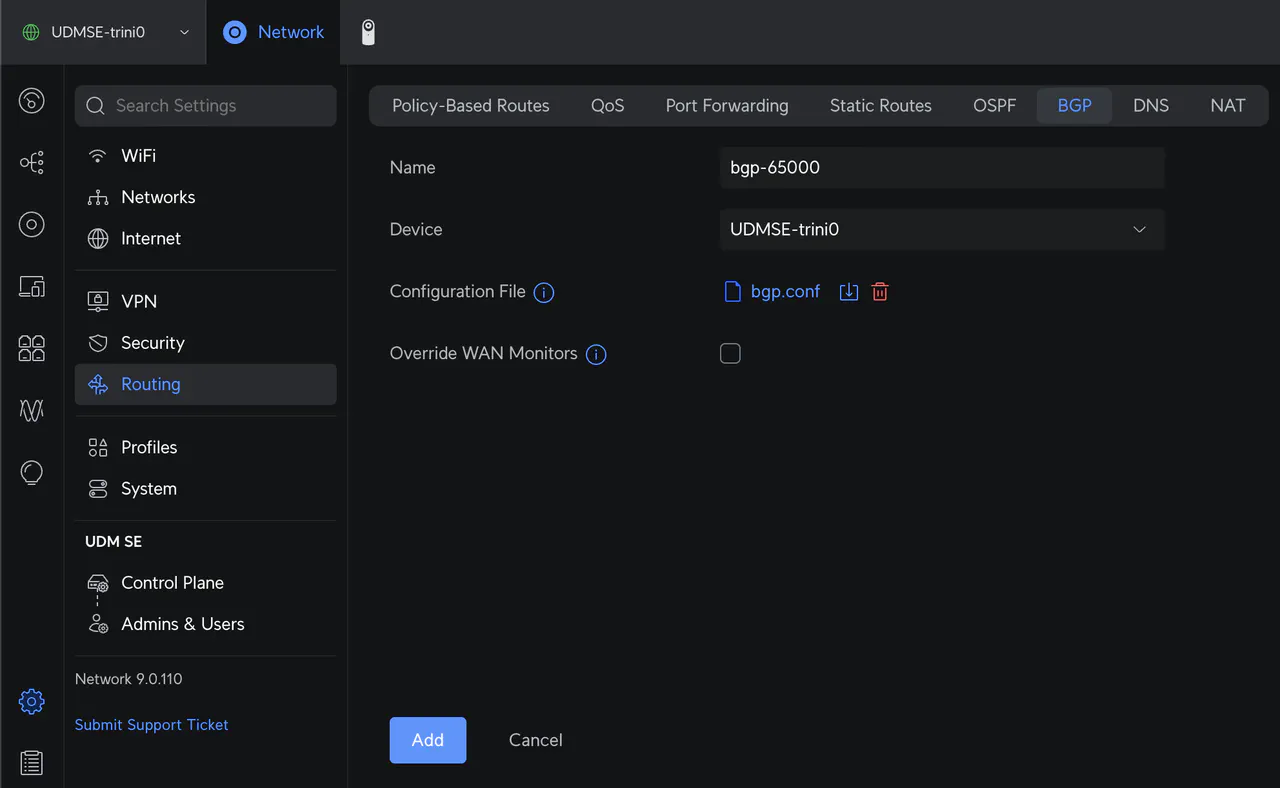

Enter a name for the configuration, select your router, and upload the bgp.conf file created earlier. Click Add.

That is it for the BGP configuration on the UniFi router. Next, I will enable Cilium’s BGP Control Plane and Gateway API in Kubernetes.

Cilium BGP Control Plane

Install Cilium’s CLI for macOS:

CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/main/stable.txt)
CLI_ARCH=amd64
if [ "$(uname -m)" = "arm64" ]; then CLI_ARCH=arm64; fi
curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-darwin-${CLI_ARCH}.tar.gz{,.sha256sum}
shasum -a 256 -c cilium-darwin-${CLI_ARCH}.tar.gz.sha256sum
sudo tar xzvfC cilium-darwin-${CLI_ARCH}.tar.gz /usr/local/bin
rm cilium-darwin-${CLI_ARCH}.tar.gz{,.sha256sum}

Install the required Gateway API CRDs (Custom Resource Definitions).

kubectl apply \
  --filename https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/standard/gateway.networking.k8s.io_gatewayclasses.yaml \
  --filename https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/standard/gateway.networking.k8s.io_gateways.yaml \
  --filename https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/standard/gateway.networking.k8s.io_httproutes.yaml \
  --filename https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/standard/gateway.networking.k8s.io_referencegrants.yaml \
  --filename https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/standard/gateway.networking.k8s.io_grpcroutes.yaml \
  --filename https://raw.githubusercontent.com/kubernetes-sigs/gateway-api/v1.1.0/config/crd/experimental/gateway.networking.k8s.io_tlsroutes.yaml

Enable Cilium’s BGP Control Plane and Gateway API

cilium upgrade --version 1.16.5 \
  --set bgpControlPlane.enabled=true \
  --set gatewayAPI.enabled=true \
  --set gatewayAPI.enableAlpn=true \
  --set gatewayAPI.enableAppProtocol=true

After about a minute, check the state of Cilium by running cilium status --wait

❯ cilium status --wait
    /¯¯\
 /¯¯\__/¯¯\    Cilium:             OK
 \__/¯¯\__/    Operator:           OK
 /¯¯\__/¯¯\    Envoy DaemonSet:    OK
 \__/¯¯\__/    Hubble Relay:       disabled
    \__/       ClusterMesh:        disabled

DaemonSet              cilium             Desired: 3, Ready: 3/3, Available: 3/3
DaemonSet              cilium-envoy       Desired: 3, Ready: 3/3, Available: 3/3
Deployment             cilium-operator    Desired: 1, Ready: 1/1, Available: 1/1
Containers:            cilium             Running: 3
                       cilium-envoy       Running: 3
                       cilium-operator    Running: 1
Cluster Pods:          4/4 managed by Cilium
Helm chart version:    1.16.5

Validate that the cilium gateway class was created by running kubectl get gatewayclass/cilium

❯ kubectl get gatewayclass/cilium
NAME     CONTROLLER                     ACCEPTED   AGE
cilium   io.cilium/gateway-controller   True       3m47s

Now I’ll create the BGP control plane manifests for CiliumBGPClusterConfig, CiliumBGPPeerConfig, CiliumBGPAdvertisement, and CiliumLoadBalancerIPPool

# bgp-control-plane.yaml
tee infra/cilium/bgp-control-plane.yaml << EOF > /dev/null
---
apiVersion: cilium.io/v2alpha1
kind: CiliumBGPClusterConfig
metadata:
  name: cilium-bgp
spec:
  nodeSelector:
    matchLabels:
      bgp: "65020"
  bgpInstances:
  - name: "65020"
    localASN: 65020
    peers:
    - name: "udm-se-65000"
      peerASN: 65000
      peerAddress: 192.168.2.1
      peerConfigRef:
        name: "cilium-peer"
---
apiVersion: cilium.io/v2alpha1
kind: CiliumBGPPeerConfig
metadata:
  name: cilium-peer
spec:
  gracefulRestart:
    enabled: true
    restartTimeSeconds: 15
  families:
  - afi: ipv4
    safi: unicast
    advertisements:
      matchLabels:
        advertise: "bgp"
---
apiVersion: cilium.io/v2alpha1
kind: CiliumBGPAdvertisement
metadata:
  name: bgp-advertisements
  labels:
    advertise: bgp
spec:
  advertisements:
  - advertisementType: "Service"
    service:
      addresses:
      - LoadBalancerIP
    selector:
      matchExpressions:
      - {key: gateway.networking.k8s.io/gateway-name, operator: In, values: ['my-gateway']}
---
apiVersion: "cilium.io/v2alpha1"
kind: CiliumLoadBalancerIPPool
metadata:
  name: dev-core-lb-ip-pool
spec:
  blocks:
  - start: "192.168.254.10"
    stop: "192.168.254.30"
  serviceSelector:
    matchExpressions:
    - {key: gateway.networking.k8s.io/gateway-name, operator: In, values: ['my-gateway']}
EOF

Review this file, as there are a few items to pay attention to:

- For CiliumBGPClusterConfig, .spec.nodeSelector requires labeling the nodes.

- For CiliumBGPClusterConfig, .spec.bgpInstances[*] needs to be configured for your router.

- For CiliumBGPAdvertisement, .spec.advertisements[*].selector needs to match the Gateway’s name (my-gateway), which I will create later.

- For CiliumLoadBalancerIPPool, .spec.blocks[*] can be configured with IP ranges or CIDR ranges.

- For CiliumLoadBalancerIPPool, .spec.serviceSelector needs to match the Gateway’s name (my-gateway), which I will create later.
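As a sketch of the CIDR form mentioned in the list: a block can carry a cidr field instead of start and stop. The /28 below and the file name lb-pool-cidr.yaml are illustrative assumptions, not values from my setup. Because it reuses the pool name, applying it would replace the start/stop definition rather than add a second pool.

```shell
# Illustrative only: the same pool expressed as a CIDR block.
# 192.168.254.16/28 spans 192.168.254.16 through 192.168.254.31.
tee lb-pool-cidr.yaml << EOF > /dev/null
---
apiVersion: "cilium.io/v2alpha1"
kind: CiliumLoadBalancerIPPool
metadata:
  name: dev-core-lb-ip-pool
spec:
  blocks:
  - cidr: "192.168.254.16/28"
  serviceSelector:
    matchExpressions:
    - {key: gateway.networking.k8s.io/gateway-name, operator: In, values: ['my-gateway']}
EOF
```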

Apply a label to the nodes so that it aligns with CiliumBGPClusterConfig.spec.nodeSelector

kubectl label nodes --all bgp=65020

Apply ./infra/cilium/bgp-control-plane.yaml

kubectl apply -f ./infra/cilium/bgp-control-plane.yaml

Define the Namespace and Gateway manifests (the HTTPRoute comes later, together with the test workload):

# gateway.yaml
tee ./infra/cilium/gateway.yaml << EOF > /dev/null
---
apiVersion: v1
kind: Namespace
metadata:
  name: infra-gateway
  labels:
    name: infra
---
apiVersion: gateway.networking.k8s.io/v1
kind: Gateway
metadata:
  name: my-gateway
  namespace: infra-gateway
spec:
  gatewayClassName: cilium
  listeners:
  - name: http
    protocol: HTTP
    port: 80
    allowedRoutes:
      namespaces:
        from: All
EOF

Now apply the Gateway manifest to the cluster by running kubectl apply -f ./infra/cilium/gateway.yaml.

Review the created gateway and associated service by running the following: kubectl get -n infra-gateway gateway,svc

❯ kubectl get -n infra-gateway gateway,svc
NAME                                           CLASS    ADDRESS          PROGRAMMED   AGE
gateway.gateway.networking.k8s.io/my-gateway   cilium   192.168.254.10   True         22s

NAME                                TYPE           CLUSTER-IP       EXTERNAL-IP      PORT(S)        AGE
service/cilium-gateway-my-gateway   LoadBalancer   10.107.142.196   192.168.254.10   80:30901/TCP   22s

Notice the ADDRESS column of the Gateway and the EXTERNAL-IP column of the Service: both show the same IP address, which was allocated from the CiliumLoadBalancerIPPool.
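To double-check that an assigned address really falls inside the pool range, the comparison can be done in plain shell. This is a small sketch; ip_to_int and ip_in_range are hypothetical helper names, and it assumes well-formed dotted-quad IPv4 addresses without leading zeros.

```shell
# Convert a dotted-quad IPv4 address to a single integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeeds if <ip> lies within <start>..<stop>, inclusive.
# Usage: ip_in_range <ip> <start> <stop>
ip_in_range() {
  addr=$(ip_to_int "$1")
  range_start=$(ip_to_int "$2")
  range_stop=$(ip_to_int "$3")
  [ "$addr" -ge "$range_start" ] && [ "$addr" -le "$range_stop" ]
}

# Values taken from the pool and the Gateway address in this article.
if ip_in_range 192.168.254.10 192.168.254.10 192.168.254.30; then
  echo "address is inside the pool"
else
  echo "address is outside the pool"
fi
```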

With the pieces in place, let us take a look at BGP’s status. For Cilium, run the following two commands:

cilium bgp peers && cilium bgp routes

❯ cilium bgp peers && cilium bgp routes
Node                 Local AS   Peer AS   Peer Address   Session State   Uptime   Family         Received   Advertised
dev-clus-core-cp01   65020      65000     192.168.2.1    established     4m5s     ipv4/unicast   1          2
dev-clus-core-cp02   65020      65000     192.168.2.1    established     4m3s     ipv4/unicast   1          2
dev-clus-core-cp03   65020      65000     192.168.2.1    established     4m2s     ipv4/unicast   1          2
(Defaulting to `available ipv4 unicast` routes, please see help for more options)

Node                 VRouter   Prefix              NextHop   Age   Attrs
dev-clus-core-cp01   65020     192.168.254.10/32   0.0.0.0   57s   [{Origin: i} {Nexthop: 0.0.0.0}]
dev-clus-core-cp02   65020     192.168.254.10/32   0.0.0.0   57s   [{Origin: i} {Nexthop: 0.0.0.0}]
dev-clus-core-cp03   65020     192.168.254.10/32   0.0.0.0   57s   [{Origin: i} {Nexthop: 0.0.0.0}]
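For a quick pass/fail check instead of reading the table, the peer output can be filtered for sessions that are not established. This is a rough sketch; check_bgp_peers is a hypothetical helper name, it assumes the column layout shown above (session state in the fifth field of each data row), and the "active" row in the sample is made up to show the failure case.

```shell
# Reads `cilium bgp peers` output on stdin. Prints the name of every node whose
# session state is not "established" and returns non-zero if any was found.
check_bgp_peers() {
  awk 'NR > 1 && NF >= 5 && $5 != "established" { print $1; bad = 1 }
       END { exit bad }'
}

# Sample input; the second data row is an invented failure for illustration.
printf '%s\n' \
  'Node Local AS Peer AS Peer Address Session State Uptime Family Received Advertised' \
  'dev-clus-core-cp01 65020 65000 192.168.2.1 established 4m5s ipv4/unicast 1 2' \
  'dev-clus-core-cp02 65020 65000 192.168.2.1 active 0s ipv4/unicast 0 0' \
  | check_bgp_peers || echo "some BGP sessions are not established"
```

Against a live cluster, the same function would be fed with cilium bgp peers | check_bgp_peers.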

SSH into the router and review BGP’s status by running: vtysh -c 'show bgp summary' && vtysh -c 'show ip bgp'

# vtysh -c 'show bgp summary' && vtysh -c 'show ip bgp'

IPv4 Unicast Summary (VRF default):
BGP router identifier 192.168.2.1, local AS number 65000 vrf-id 0
BGP table version 84
RIB entries 1, using 184 bytes of memory
Peers 3, using 2169 KiB of memory
Peer groups 1, using 64 bytes of memory

Neighbor        V    AS      MsgRcvd   MsgSent   TblVer   InQ   OutQ   Up/Down    State/PfxRcd   PfxSnt   Desc
192.168.110.31  4    65020   22595     22577     0        0     0      00:06:29   1              1        N/A
192.168.110.32  4    65020   22582     22537     0        0     0      00:06:27   1              1        N/A
192.168.110.33  4    65020   22579     22535     0        0     0      00:06:26   1              1        N/A

Total number of neighbors 3
BGP table version is 84, local router ID is 192.168.2.1, vrf id 0
Default local pref 100, local AS 65000
Status codes:  s suppressed, d damped, h history, * valid, > best, = multipath,
               i internal, r RIB-failure, S Stale, R Removed
Nexthop codes: @NNN nexthop's vrf id, < announce-nh-self
Origin codes:  i - IGP, e - EGP, ? - incomplete
RPKI validation codes: V valid, I invalid, N Not found

   Network              Next Hop         Metric LocPrf Weight Path
*> 192.168.254.10/32
                        192.168.110.31                      0 65020 i
*=                      192.168.110.32                      0 65020 i
*=                      192.168.110.33                      0 65020 i

Displayed 1 routes and 3 total paths

From the router, also view the routing table by running netstat -ar

# netstat -ar
Kernel IP routing table
Destination      Gateway          Genmask           Flags   MSS Window  irtt Iface
10.255.253.0     0.0.0.0          255.255.255.0     U         0 0          0 br4040
<REDACT>         0.0.0.0          255.255.252.0     U         0 0          0 eth9
<REDACT>         0.0.0.0          255.255.255.192   U         0 0          0 eth8
192.168.2.0      0.0.0.0          255.255.255.0     U         0 0          0 br0
192.168.10.0     0.0.0.0          255.255.255.192   U         0 0          0 br10
192.168.20.0     0.0.0.0          255.255.255.128   U         0 0          0 br20
192.168.22.0     0.0.0.0          255.255.255.128   U         0 0          0 br22
192.168.24.0     0.0.0.0          255.255.255.128   U         0 0          0 br24
192.168.90.0     0.0.0.0          255.255.255.128   U         0 0          0 br90
192.168.100.0    0.0.0.0          255.255.255.128   U         0 0          0 br100
192.168.102.0    10.255.253.2     255.255.255.192   UG        0 0          0 br4040
192.168.104.0    10.255.253.2     255.255.255.192   UG        0 0          0 br4040
192.168.108.0    0.0.0.0          255.255.255.128   U         0 0          0 br108
192.168.110.0    0.0.0.0          255.255.255.128   U         0 0          0 br110
192.168.254.10   192.168.110.31   255.255.255.255   UGH       0 0          0 br110

Note the last line in the table that shows the IP address from CiliumLoadBalancerIPPool and the next-hop IP address, which is one of the Kubernetes nodes.

Things are shaping up nicely! 😎

Workload testing

Let me define a test Namespace, HTTPRoute, Service, and Deployment using the traefik/whoami container image.

# whoami.yaml
tee ./whoami.yaml << EOF > /dev/null
---
apiVersion: v1
kind: Namespace
metadata:
  name: whoami
---
apiVersion: gateway.networking.k8s.io/v1
kind: HTTPRoute
metadata:
  name: httproute-whoami
  namespace: whoami
spec:
  parentRefs:
  - name: my-gateway
    namespace: infra-gateway
    sectionName: http
  rules:
  - backendRefs:
    - name: whoami
      port: 80
---
apiVersion: v1
kind: Service
metadata:
  name: whoami
  namespace: whoami
  labels:
    app: whoami
    service: whoami
spec:
  ports:
  - port: 80
    name: http
  selector:
    app: whoami
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: whoami-http
  namespace: whoami
spec:
  replicas: 3
  selector:
    matchLabels:
      app: whoami
  template:
    metadata:
      labels:
        app: whoami
    spec:
      containers:
      - name: whoami
        image: traefik/whoami
        ports:
        - name: web
          containerPort: 80
EOF

Deploy the workload by running kubectl apply -f ./whoami.yaml, then validate that the pods are running across all nodes by running: kubectl get pods -n whoami -o wide

❯ kubectl get pods -n whoami -o wide
NAME                           READY   STATUS    RESTARTS   AGE   IP             NODE                 NOMINATED NODE   READINESS GATES
whoami-http-68965c9df8-4kqw4   1/1     Running   0          48s   10.244.1.209   dev-clus-core-cp03   <none>           <none>
whoami-http-68965c9df8-gzhjj   1/1     Running   0          48s   10.244.0.21    dev-clus-core-cp02   <none>           <none>
whoami-http-68965c9df8-xj4wl   1/1     Running   0          48s   10.244.3.248   dev-clus-core-cp01   <none>           <none>
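Checking the spread by eye works for three pods; for larger deployments, counting the distinct nodes is easier. This is a minimal sketch; count_pod_nodes is a hypothetical helper name, and it assumes NODE is the seventh whitespace-separated field, which holds for the output above but would shift if RESTARTS contained extra text such as a restart timestamp.

```shell
# Reads `kubectl get pods -o wide` output on stdin and prints how many
# distinct values appear in the NODE column (field 7, header skipped).
count_pod_nodes() {
  awk 'NR > 1 && !seen[$7]++ { n++ } END { print n + 0 }'
}

# Sample input copied from the pod listing in this article.
printf '%s\n' \
  'NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES' \
  'whoami-http-68965c9df8-4kqw4 1/1 Running 0 48s 10.244.1.209 dev-clus-core-cp03 <none> <none>' \
  'whoami-http-68965c9df8-gzhjj 1/1 Running 0 48s 10.244.0.21 dev-clus-core-cp02 <none> <none>' \
  'whoami-http-68965c9df8-xj4wl 1/1 Running 0 48s 10.244.3.248 dev-clus-core-cp01 <none> <none>' \
  | count_pod_nodes
```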

From your workstation, let us get the IP address for the Gateway, and then try to curl the /api endpoint.

❯ GATEWAY=$(kubectl get -n infra-gateway gateway my-gateway -o json | jq -r '.status.addresses[].value')
❯ curl -s http://$GATEWAY/api | jq '.'
{
  "hostname": "whoami-http-68965c9df8-gzhjj",
  "ip": [
    "127.0.0.1",
    "::1",
    "10.244.0.21",
    "fe80::9482:c0ff:fecb:938a"
  ],
  "headers": {
    "Accept": [
      "*/*"
    ],
    "User-Agent": [
      "curl/8.7.1"
    ],
    "X-Envoy-Internal": [
      "true"
    ],
    "X-Forwarded-For": [
      "192.168.20.93"
    ],
    "X-Forwarded-Proto": [
      "http"
    ],
    "X-Request-Id": [
      "2cffe316-36b5-42aa-87fe-48d01837cc38"
    ]
  },
  "url": "/api",
  "host": "192.168.254.10",
  "method": "GET",
  "remoteAddr": "10.244.3.205:43423"
}

Let us try a series of curl commands by running:

❯ for i in $(seq 0 11); do curl -s http://$GATEWAY/api | jq '.hostname'; sleep 1; done
"whoami-http-68965c9df8-4kqw4"
"whoami-http-68965c9df8-4kqw4"
"whoami-http-68965c9df8-xj4wl"
"whoami-http-68965c9df8-gzhjj"
"whoami-http-68965c9df8-xj4wl"
"whoami-http-68965c9df8-4kqw4"
"whoami-http-68965c9df8-xj4wl"
"whoami-http-68965c9df8-xj4wl"
"whoami-http-68965c9df8-gzhjj"
"whoami-http-68965c9df8-gzhjj"
"whoami-http-68965c9df8-gzhjj"
"whoami-http-68965c9df8-4kqw4"

Sweet! I can access all pods over the LoadBalancer IP address, which Cilium advertised into UniFi’s route table.
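To see how evenly the requests were spread, the hostnames can be tallied per pod. Here is a small sketch; tally_hosts is a hypothetical helper name, and the sample input is a shortened, unquoted version of the hostnames returned above (as jq -r '.hostname' would print them).

```shell
# Reads one hostname per line on stdin and prints "<count> <hostname>" per pod.
tally_hosts() {
  sort | uniq -c | awk '{ print $1, $2 }'
}

# Sample input: a few of the hostnames from the curl loop.
printf '%s\n' \
  whoami-http-68965c9df8-4kqw4 \
  whoami-http-68965c9df8-4kqw4 \
  whoami-http-68965c9df8-xj4wl \
  whoami-http-68965c9df8-gzhjj \
  | tally_hosts
```

Against the live Gateway, the curl loop (with jq -r instead of jq) can be piped straight into tally_hosts.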

Let me clean up, as I want to tweak this solution further.

kubectl delete -f ./whoami.yaml

kubectl delete -f ./infra/cilium/gateway.yaml

Conclusion

In this article, I showed how to configure BGP on a UniFi router through the UI included in the latest UniFi OS, instead of hacking the router. I also showed how to enable and configure Cilium’s BGP Control Plane and Gateway API features to complete the BGP configuration and create a simple ingress into a Kubernetes cluster. In my next article, I will explore setting up cert-manager and improving the Gateway configuration to create a reverse proxy using Cilium.